AI Interview Series #4: Explain KV Caching

Question: You’re deploying an LLM in production. Here's what you need to know.

What’s Happening

Alright so Question: You’re deploying an LLM in production.

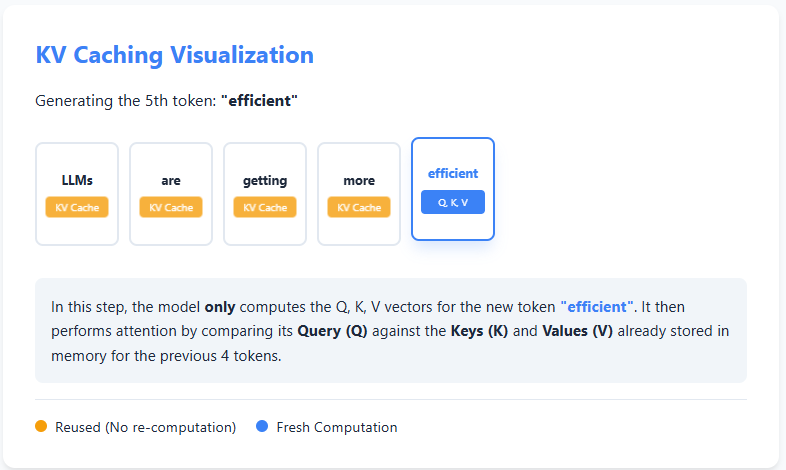

Generating the first few tokens is fast, but as the sequence grows, each additional token takes progressively longer to generate—even though the model architecture and hardware remain the same. (wild, right?)

If compute isn’t the primary bottleneck, what inefficiency is causing this slowdown, and how would you redesign the inference [] The post AI Interview Series #4: Explain KV Caching appeared first on MarkTechPost.

Why This Matters

The AI space continues to evolve at a wild pace, with developments like this becoming more common.

As AI capabilities expand, we’re seeing more announcements like this reshape the industry.

The Bottom Line

This story is still developing, and we’ll keep you updated as more info drops.

Are you here for this or nah?

Originally reported by MarkTechPost

Got a question about this? 🤔

Ask anything about this article and get an instant answer.

Answers are AI-generated based on the article content.

vibe check: