Nanbeige's 3B Model Hits 30B Reasoning Power

A 3B-parameter model is challenging bigger LLMs, claiming 30B-class reasoning. Here's a look at Nanbeige's training recipe.

✍️

vibes curator ✨

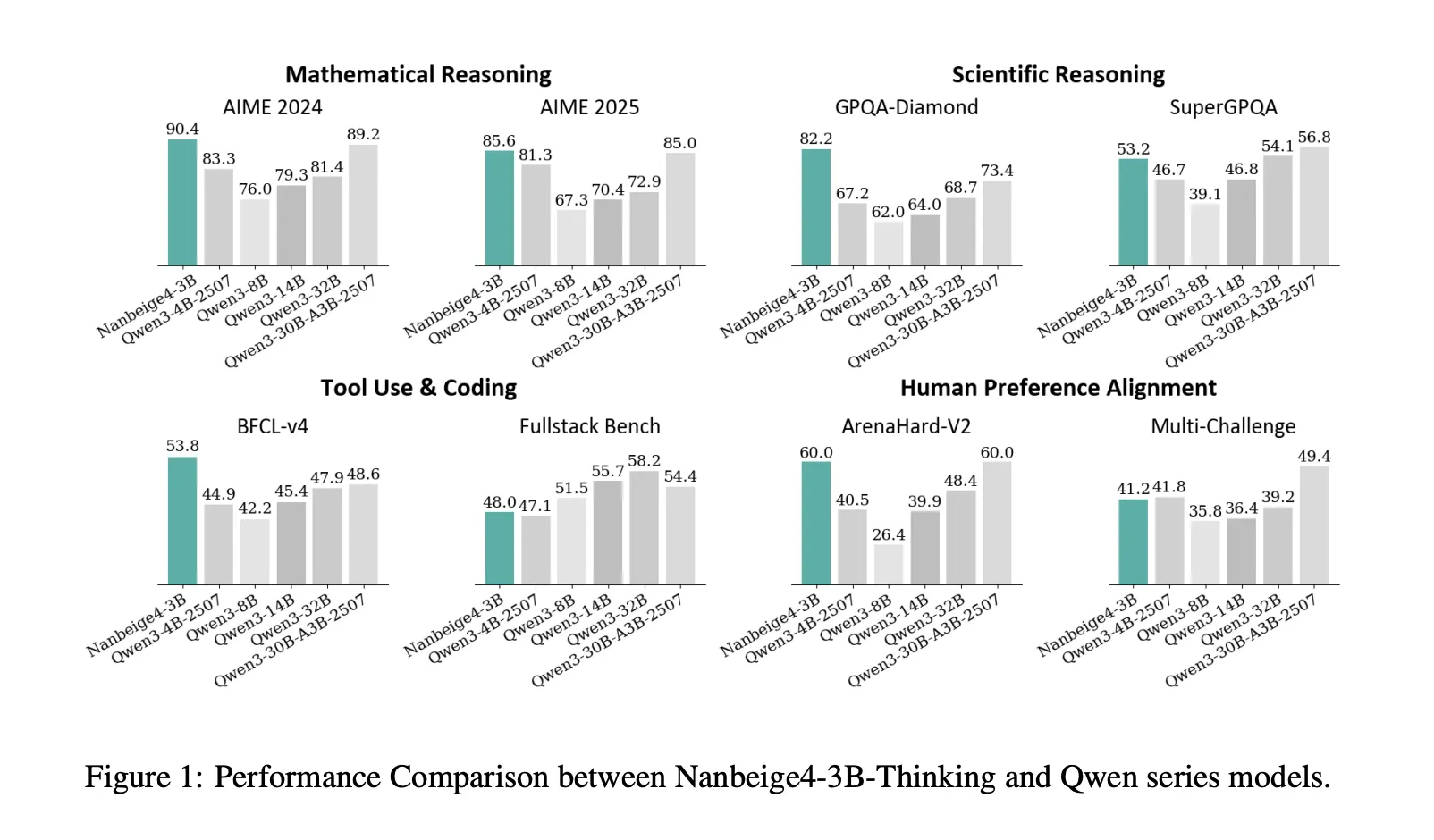

## What's Happening

The Nanbeige LLM Lab at Boss Zhipin has unveiled Nanbeige4-3B, a compact 3B-parameter language model. The model is making waves by claiming to deliver 30B-class reasoning capabilities, a feat usually reserved for much larger LLMs.

The team got there not by scaling parameters but by overhauling the training process. Nanbeige4-3B was developed using an intensive 23T-token pipeline built around a refined 'training recipe' that covers data quality, curriculum scheduling, distillation, and reinforcement learning.

## Why This Matters

If the claim holds, advanced AI reasoning could become far more accessible and efficient. It challenges the industry's belief that bigger models inherently mean better performance, opening doors for smaller, more specialized AI.

For developers and businesses, this could drastically reduce the computational resources needed to deploy powerful language models, making high-level reasoning available on less powerful hardware.

- Significant cost reduction for AI development and deployment.

- Enables faster inference and easier integration into existing systems.

- Shifts focus from raw parameter count to innovative training methodologies.

## The Bottom Line

Nanbeige4-3B represents a significant pivot in LLM development, making the case that smarter training can rival sheer scale. This development could reshape how we approach AI, making powerful models more efficient and widely available. Will this spark a new era where quality of training data and methodology consistently trump model size?
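To put the hardware point in rough numbers, here is a minimal back-of-the-envelope sketch of how much memory the weights alone would need at 3B versus 30B parameters. It assumes 16-bit weights (2 bytes per parameter) and ignores activations, the KV cache, and runtime overhead. The helper name `weight_memory_gb` is hypothetical, and none of these figures come from Nanbeige or the original report.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed to hold model weights, in GB (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at the same precision
    (2 bytes = 16-bit by default). Activations and caches are not counted.
    """
    return num_params * bytes_per_param / 1e9


small = weight_memory_gb(3e9)   # 3B parameters at 16-bit precision
large = weight_memory_gb(30e9)  # 30B parameters at 16-bit precision

print(f"3B model:  ~{small:.0f} GB of weights")
print(f"30B model: ~{large:.0f} GB of weights")
```

Under those assumptions the gap is about 6 GB versus 60 GB, which is roughly the difference between fitting on a single consumer GPU and needing server-class hardware.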


Originally reported by MarkTechPost
