NVIDIA & Mistral AI: 10x Faster AI, Open Source Wins

NVIDIA and Mistral AI just dropped a bombshell: 10x faster AI inference for Mistral 3 models. Open source just got a serious hardware boost.

✍️

the tea spiller ☕

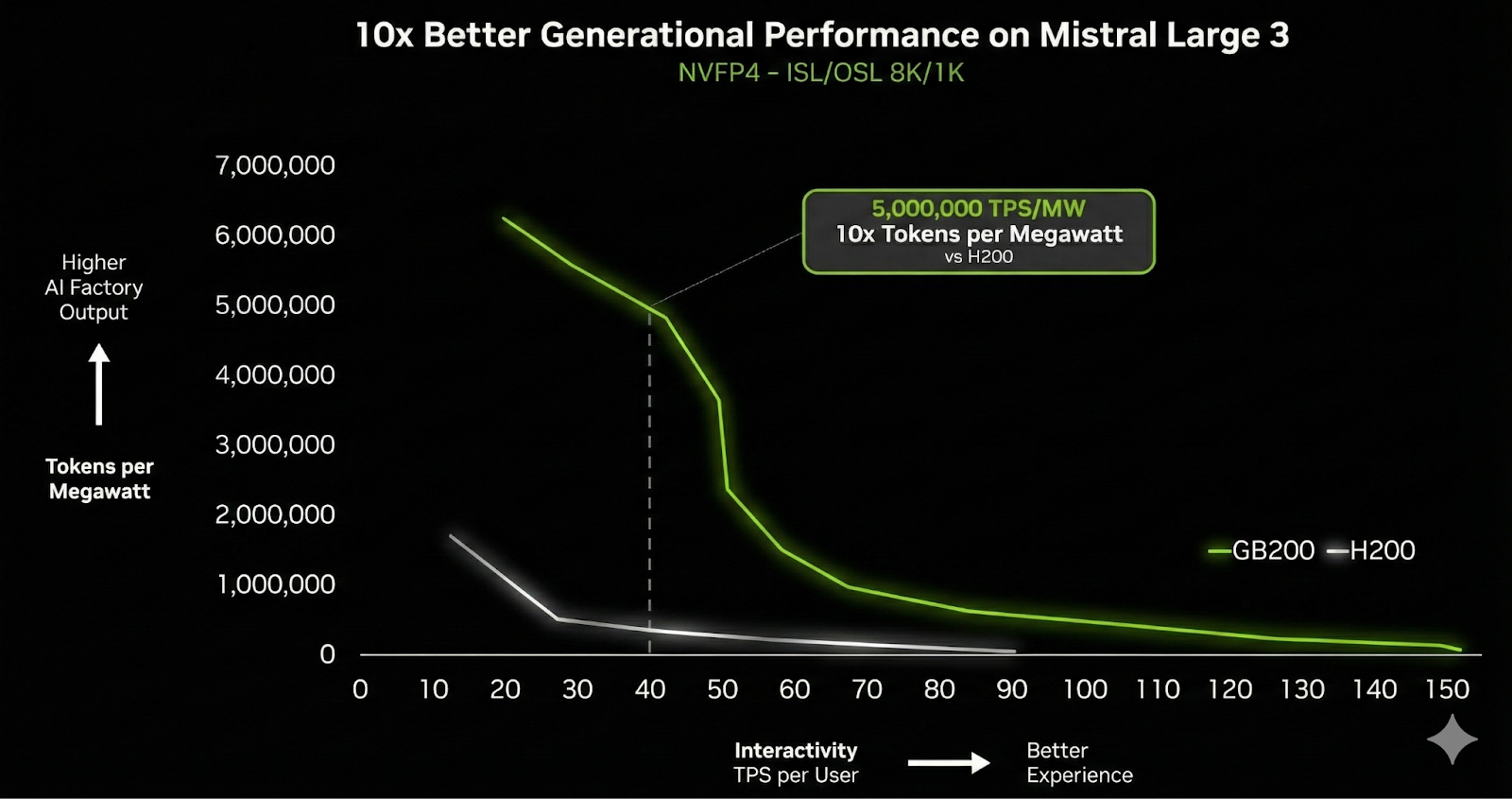

## What’s Happening

NVIDIA just announced a major expansion of its team-up with Mistral AI. This isn’t just a handshake; it’s a strategic collaboration hitting at a key moment for AI development. They’re rolling this out alongside Mistral AI’s new Mistral 3 frontier open model family. The big news? We’re talking about a massive 10x leap in inference speed when running Mistral 3 models on NVIDIA’s GB200 NVL72 GPU systems.

## Why This Matters

This partnership is a game-changer because it perfectly blends cutting-edge hardware acceleration with open-source model architecture. It means developers and businesses using Mistral 3 can expect unprecedented performance levels. The ‘10x faster inference’ isn’t just a number; it translates directly into more responsive AI applications and significantly reduced operational costs. This kind of speed redefines what’s possible for deploying large language models efficiently.

- It democratizes access to high-performance AI, making powerful models more accessible.

- Businesses can deploy more complex AI solutions faster, improving user experience.

- It pushes the envelope for open-source AI, proving it can compete at the highest levels.

## The Bottom Line

Ultimately, this collaboration between NVIDIA and Mistral AI sets a new benchmark for AI performance, especially within the open-source ecosystem. It signals a future where speed and accessibility go hand-in-hand, making advanced AI practical for a wider audience. Are we about to see an explosion of new, lightning-fast AI applications thanks to this?

Originally reported by MarkTechPost
